Web crawler tools are a core part of modern SEO. As businesses work to improve their online presence, it helps to understand how these tools function. A web crawler, often called a spider or bot, systematically explores the web to gather data for search engines. A page has to be crawled before it can be indexed, and indexed before it can appear in search results, so crawling is a prerequisite for ranking rather than a guarantee of it.

Imagine the internet as a massive library where every webpage represents a book. Without proper organization, finding the right information would be an overwhelming task. This is where web crawlers step in. They act as librarians, cataloging content so that it can be found later. By understanding how crawlers read a site, businesses can remove the obstacles that keep pages out of search results and attract more visitors to their websites.

In this detailed guide, we will explore the world of web crawler tools. From understanding their functionalities and benefits to learning best practices, this article will equip you with the knowledge needed to harness the power of web crawlers effectively. Whether you're new to SEO or an experienced digital marketer, this guide will provide valuable insights.


Table of Contents

- Introduction to Web Crawler Tools

- How Web Crawler Tools Work

- Benefits of Using Web Crawler Tools

- Types of Web Crawlers

- Top Web Crawler Tools

- Web Crawlers and SEO

- Best Practices for Using Web Crawlers

- Data Privacy and Ethical Considerations

- The Future of Web Crawlers

- Conclusion

Introduction to Web Crawler Tools

What Are Web Crawler Tools?

Web crawler tools are programs that systematically navigate the web, fetching pages and collecting data about them. Search engines such as Google and Bing depend on crawlers to keep their indexes of web content up to date. By revisiting websites continuously, crawlers give the ranking systems fresh content to draw on when answering users' queries.

The core function of web crawler tools involves following hyperlinks from one webpage to another, gathering information as they go. This data is then processed and stored in an index, which search engines use to rank websites based on relevance and quality. Understanding the mechanics of these tools can greatly enhance your SEO efforts and improve your website's visibility in search results.

How Web Crawler Tools Work

Understanding the Crawling Process

The crawling process involves several crucial steps that ensure efficient data collection. Initially, web crawlers start with a list of URLs, commonly referred to as a seed set. They then follow the links on these pages to discover new URLs, creating an extensive network of interconnected web pages. As they crawl, they store the content of each page in a database for further analysis.

- URL Discovery: Crawlers identify new URLs by following hyperlinks on web pages, expanding their reach.

- Content Extraction: They extract relevant data from web pages, including text, images, and metadata, to provide comprehensive insights.

- Data Storage: The collected data is stored in an index, which search engines use to rank websites based on relevance and quality.

This systematic approach ensures that search engines have access to the latest and most relevant content, enhancing the overall user experience.
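The three steps above can be sketched as a breadth-first crawl. This is a minimal illustration, not how any particular search engine works: the `crawl` function, the `fetch` hook, and the in-memory `PAGES` dictionary are all assumptions made so the example runs without network access.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urldefrag


class LinkExtractor(HTMLParser):
    """Collects href values from <a> tags and the visible text of a page."""

    def __init__(self):
        super().__init__()
        self.links = []
        self.text_parts = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)

    def handle_data(self, data):
        if data.strip():
            self.text_parts.append(data.strip())


def crawl(seeds, fetch, max_pages=100):
    """Breadth-first crawl starting from the seed set.

    `fetch(url)` is a hypothetical hook that returns HTML, or None if the
    page cannot be retrieved.
    """
    frontier = deque(seeds)   # URLs waiting to be visited
    seen = set(seeds)         # URLs already queued, to avoid loops
    index = {}                # url -> extracted text (the "data storage" step)
    while frontier and len(index) < max_pages:
        url = frontier.popleft()
        html = fetch(url)
        if html is None:
            continue
        parser = LinkExtractor()
        parser.feed(html)
        parser.close()
        index[url] = " ".join(parser.text_parts)       # content extraction
        for href in parser.links:                      # URL discovery
            absolute, _fragment = urldefrag(urljoin(url, href))
            if absolute not in seen:
                seen.add(absolute)
                frontier.append(absolute)
    return index


# Toy in-memory "web" standing in for real HTTP requests.
PAGES = {
    "https://example.com/": '<a href="/about">About</a> <a href="/blog#top">Blog</a>',
    "https://example.com/about": '<p>About us</p> <a href="/">Home</a>',
    "https://example.com/blog": '<p>Posts</p> <a href="/missing">Old post</a>',
}

index = crawl(["https://example.com/"], PAGES.get)
for url, text in index.items():
    print(url, "->", text)
```

A real crawler would also need politeness delays, robots.txt checks, and error handling, all of which are left out here to keep the control flow visible.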

Benefits of Using Web Crawler Tools

Enhancing SEO Performance

One of the primary advantages of web crawler tools is their ability to significantly improve SEO performance. By analyzing how search engines crawl and index websites, businesses can optimize their content for better visibility. This includes ensuring that all pages are easily accessible, meta tags are properly configured, and content is structured to facilitate optimal crawling.

Moreover, web crawlers can help identify technical issues that may impede search engine indexing, such as broken links, duplicate content, and slow load times. Addressing these issues can lead to improved search rankings and increased organic traffic, ultimately benefiting your website's overall performance.
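As a rough sketch of how an audit might flag two of these issues, the snippet below checks crawl output for broken links and exact duplicate content. The function names and the sample data are hypothetical, and real audit tools typically use fuzzier duplicate detection than a plain hash.

```python
import hashlib
from collections import defaultdict


def find_broken_links(link_graph, status_by_url):
    """Return (source, target) pairs whose target is missing or returned 4xx/5xx."""
    broken = []
    for source, targets in link_graph.items():
        for target in targets:
            status = status_by_url.get(target)
            if status is None or status >= 400:
                broken.append((source, target))
    return broken


def find_duplicate_content(text_by_url):
    """Group URLs whose text is identical after case and whitespace normalization."""
    groups = defaultdict(list)
    for url, text in text_by_url.items():
        normalized = " ".join(text.lower().split())
        digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        groups[digest].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Hypothetical crawl output: outgoing links, HTTP status codes, and page text.
LINK_GRAPH = {"/": ["/pricing", "/old-offer"], "/pricing": ["/", "/contact"]}
STATUS = {"/": 200, "/pricing": 200, "/old-offer": 404}
TEXT = {
    "/": "Welcome",
    "/pricing": "Plans and  Pricing",
    "/pricing?ref=nav": "plans and pricing",
}

broken = find_broken_links(LINK_GRAPH, STATUS)
duplicates = find_duplicate_content(TEXT)
print(broken)
print(duplicates)
```

Here `/contact` counts as broken because the crawl never recorded a status for it, which is one reasonable convention among several.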


Types of Web Crawlers

General vs Specialized Crawlers

Web crawlers can be broadly classified into two categories: general crawlers and specialized crawlers. General crawlers, like those used by major search engines, aim to index as much of the web as possible. They are designed to handle a wide variety of content types and follow a broad range of links, ensuring comprehensive coverage.

Specialized crawlers, on the other hand, focus on specific types of content or websites. These crawlers are often employed by niche search engines or businesses seeking to gather data for specific purposes. For instance, a specialized crawler might concentrate on collecting job listings or real estate information, providing targeted insights.

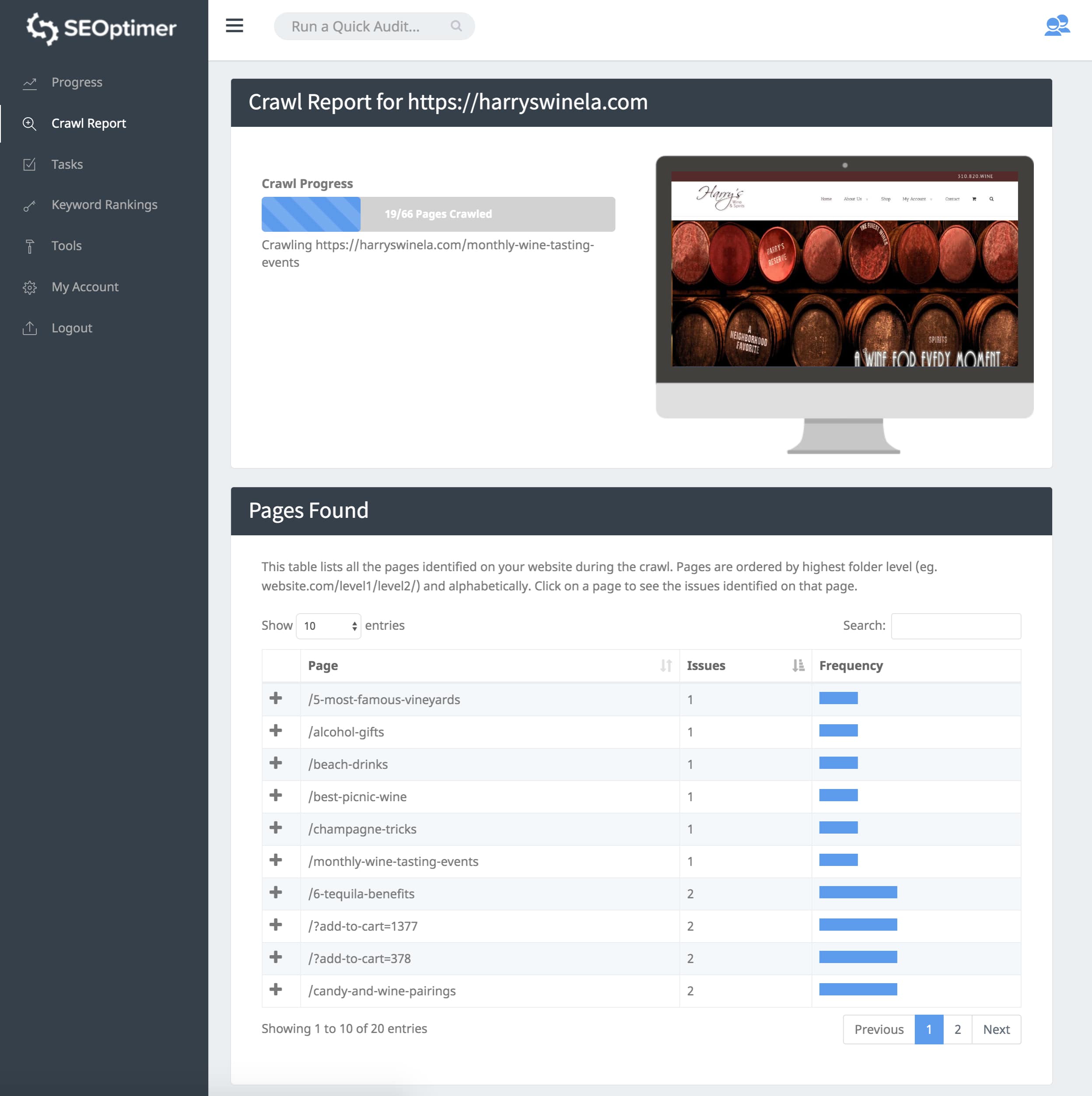

Top Web Crawler Tools

Best Tools for Crawling the Web

A variety of web crawler tools are available, each offering unique features and capabilities. Some of the most popular tools include:

- Google Search Console: Not a crawler itself, but a reporting tool that shows how Google crawls and indexes your website, helping you spot coverage problems and refine your SEO strategy.

- Sitebulb: Offers detailed site audits and crawl data to assist with SEO optimization, enabling businesses to identify and address technical issues.

- Ahrefs: Features a powerful crawler for analyzing backlinks and competitors, empowering businesses to enhance their link-building strategies.

- Screaming Frog SEO Spider: A desktop crawler for auditing large websites and identifying technical SEO issues such as broken links and duplicate pages.

Selecting the right tool depends on your specific needs and budget. Carefully evaluate each option to ensure it aligns with your requirements and objectives.

Web Crawlers and SEO

Optimizing for Search Engines

Web crawlers play a pivotal role in SEO by influencing how search engines perceive and rank websites. To optimize your site for crawlers, ensure that all pages are easily accessible through a clear navigation structure. Use descriptive URLs, meta tags, and alt text for images to provide context and enhance crawlability.
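The on-page elements mentioned above can be checked mechanically. The following is a minimal sketch using Python's standard `html.parser`; the `OnPageAudit` class and the sample markup are assumptions for illustration, not part of any named tool.

```python
from html.parser import HTMLParser


class OnPageAudit(HTMLParser):
    """Records whether a page has a title, a meta description, and image alt text."""

    def __init__(self):
        super().__init__()
        self.has_title = False
        self.has_meta_description = False
        self.images_missing_alt = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.has_meta_description = bool((attrs.get("content") or "").strip())
        elif tag == "img" and not (attrs.get("alt") or "").strip():
            # Heuristic: an empty alt is valid for purely decorative images,
            # so treat these as candidates for review rather than errors.
            self.images_missing_alt.append(attrs.get("src"))

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title and data.strip():
            self.has_title = True


SAMPLE = """
<html><head><title>Blue widgets</title></head>
<body>
<img src="/img/widget.png" alt="Blue widget on a desk">
<img src="/img/banner.png">
</body></html>
"""

audit = OnPageAudit()
audit.feed(SAMPLE)
audit.close()
print(audit.has_title, audit.has_meta_description, audit.images_missing_alt)
```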

Additionally, consider publishing an XML sitemap to help crawlers discover pages they might not reach through links alone. A sitemap is a hint, not a guarantee that every listed page will be crawled or indexed. Regularly updating your content and addressing technical issues further improves your site's crawlability, which in turn supports better search rankings.
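For sites without a CMS plugin that does this automatically, a sitemap can be generated with a few lines of code. The sketch below uses the namespace from the sitemaps.org protocol; the `build_sitemap` helper, the URLs, and the dates are made up for the example.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build a sitemap XML string from (loc, lastmod) pairs; lastmod may be None."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages and last-modified dates.
sitemap_xml = build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/blog/crawler-basics", None),
])
print(sitemap_xml)
```

The resulting file is usually served from the site root and can be referenced from robots.txt with a `Sitemap:` line.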

Best Practices for Using Web Crawlers

Maximizing Crawling Efficiency

To maximize the efficiency of web crawlers, adhere to the following best practices:

- Optimize Site Structure: Ensure a logical and hierarchical structure for easy navigation, making it simpler for crawlers to explore your site.

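- Use Robots.txt: Tell crawlers which paths they may request using a robots.txt file at the root of your site. The file is advisory: well-behaved crawlers honor it, but it is not an access control, and blocking a URL from crawling does not by itself keep it out of the index. Use a noindex directive for that.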

- Monitor Crawl Rate: Keep an eye on how frequently crawlers visit your site to avoid overloading your server and maintain optimal performance.

- Regular Audits: Conduct regular site audits to identify and fix technical issues, ensuring that your site remains fully crawlable and optimized.

By following these practices, you can ensure that web crawlers effectively index your site, improving its visibility and search engine rankings.
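To see how a crawler might interpret a robots.txt file, the sketch below parses one with Python's standard `urllib.robotparser`. The rules, the `ExampleBot` user agent, and the URLs are invented for illustration, and `site_maps()` needs Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# A hypothetical robots.txt for example.com.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart
Crawl-delay: 5

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Paths not matched by a Disallow rule may be fetched.
blog_allowed = parser.can_fetch("ExampleBot", "https://example.com/blog/post")
# Paths under /admin/ are disallowed for every user agent.
admin_allowed = parser.can_fetch("ExampleBot", "https://example.com/admin/users")
# Requested pause between requests, in seconds, for crawlers that honor it.
delay = parser.crawl_delay("ExampleBot")
# Sitemap URLs declared in the file.
sitemaps = parser.site_maps()

print(blog_allowed, admin_allowed, delay, sitemaps)
```

Not every crawler honors `Crawl-delay`, so server-side rate limiting is still worth having if crawl load is a concern.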

Data Privacy and Ethical Considerations

Respecting User Privacy

While web crawler tools are invaluable for SEO, it's crucial to consider data privacy and ethical implications. Anyone operating a crawler must respect users' privacy by complying with regulations such as the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA). In practice, this means having a lawful basis, such as consent, for collecting personal data, collecting no more than is needed, and storing it securely.

Furthermore, businesses should be transparent about their data collection practices and provide users with options to opt out if desired. By prioritizing data privacy, companies can build trust with their audience and avoid potential legal challenges, fostering a positive relationship with their users.

The Future of Web Crawlers

Innovations in Crawling Technology

The future of web crawling is promising, with ongoing advancements in artificial intelligence and machine learning. These technologies are extending what crawlers and the systems behind them can do, for example by helping prioritize which pages to fetch and by interpreting the context and meaning of the content they collect. The aim is more relevant search results and a better experience for users.

As the internet continues to expand, the role of web crawlers will become increasingly critical. Businesses must stay informed about these developments and adapt their strategies to fully leverage the potential of crawling technology, ensuring they remain competitive in the digital landscape.

Conclusion

In summary, web crawler tools are indispensable for modern SEO strategies. By understanding their functionality and implementing best practices, businesses can enhance their website's visibility and drive more traffic. Remember to respect data privacy and ethical considerations when using web crawlers to build trust with your audience.

We invite you to share your thoughts and experiences with web crawler tools in the comments below. Additionally, feel free to explore our other articles for further insights into digital marketing and SEO. Together, let's unlock the full potential of web crawling and elevate your online presence to new heights!